For Jeannine Ugalde and her middle school students, using assessment data to inform classroom instruction is a regular part of the school day.

Drawing on the results of computer-adaptive tests given periodically throughout the year, the 7th and 8th grade humanities teacher and her students at Oak Valley Middle School in San Diego set classroom goals that target the areas the youngsters struggle with the most.

“It’s hard work coming up with goals, so they feel a lot more ownership of their education,” said Ms. Ugalde, whose 1,050-student school is part of California’s Poway Unified School District. “And now they specifically say, ‘Remember, you said we were going to work on this.’ ”

Jerry Chen, one of Ms. Ugalde’s 7th graders, explained.

“The data can help me make goals because I learn where my weaknesses in school are, so I know what to make my goal,” he said. “[The information] helps me find where I am and helps me set goals according to my needs.”

As more schools gain the technological knowledge and hardware to implement computer-based tests, districts are showing a growing interest in computer-adaptive testing, which supporters say can help guide instruction, increase student motivation, and determine the best use of resources for districts.

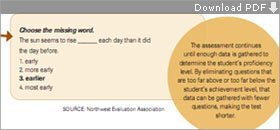

A computer-adaptive assessment is one that uses the information it receives during the test to determine which question to present the test-taker with next. For example, when a student answers a question correctly, he or she will be presented with a harder question, but if the answer is wrong, the next question will be easier. Consequently, a 5th grader taking the test could answer questions at the 6th or 3rd grade level, depending on his or her responses.

This method of testing shortens the test by not asking high-achieving students questions that are too easy for them, and likewise not giving struggling students questions that are too hard.
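The rule described above, a harder question after a correct answer and an easier one after a wrong answer, can be sketched in a few lines of Python. This is a minimal illustration, not how MAP or any other product selects questions: the function name `run_adaptive_test`, the grade-level scale of 1 to 12, the one-level step, and the simulated student are all assumptions made for the example.

```python
def run_adaptive_test(answers_correctly, start_level=5, min_level=1,
                      max_level=12, num_questions=6):
    """Simulate a simple up/down adaptive test (hypothetical sketch).

    answers_correctly: function taking a grade level and returning True
    if the simulated student answers a question at that level correctly.
    Returns a list of (level, correct) pairs, one per question asked.
    """
    level = start_level
    history = []
    for _ in range(num_questions):
        correct = answers_correctly(level)
        history.append((level, correct))
        if correct:
            # Correct answer: the next question is one level harder.
            level = min(level + 1, max_level)
        else:
            # Wrong answer: the next question is one level easier.
            level = max(level - 1, min_level)
    return history


# Assumed student: a 5th grader who can handle material up to 6th grade level.
history = run_adaptive_test(lambda level: level <= 6)
levels_asked = [level for level, _ in history]
print(levels_asked)
```

In this sketch the questions quickly settle into alternating between the highest level the student can handle and the one just above it, which is why an adaptive test can spend fewer questions on material that is far too easy or far too hard.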

In essence, “each student gets questions that are appropriate just for them,” said David J. Weiss, a professor of psychology at the University of Minnesota-Twin Cities and an expert on computer-adaptive assessments.

[Graphic: With a computer-adaptive assessment, the questions adjust in difficulty based on the student’s previous answers. The example shows how the assessment might determine a middle or high school student’s proficiency in basic grammar and usage. Source: Northwest Evaluation Association]

“[In a fixed-form test], low-ability students are going to get questions that are too difficult, and they’re going to be frustrated,” he said. “[With an adaptive test], everybody will be equally challenged.”

In addition to shortening the length of the test, the approach creates a fairer psychological test-taking environment for each student, said Mr. Weiss, who has studied computer-adaptive tests since the 1970s.

‘Kid-Centric’ Assessments

The Northwest Evaluation Association, a nonprofit organization based in Lake Oswego, Ore., that partners with school districts to provide testing services, has created one of the most widely used computer-adaptive assessments. Called the Measures of Academic Progress, or MAP, it is used by more than 2,340 school districts in the United States, including the Poway Unified schools, and in 61 other countries.

By adjusting in difficulty based on the student’s performance, MAP makes assessment “kid-centric,” said Matt Chapman, the president and chief executive officer of the NWEA. After administering the assessment, the teacher can not only see whether the student passed a certain benchmark but also pinpoint exactly where the student’s achievement level is: below, at, or above grade level.

“It increases the information you learn about the student, the student’s growth, and how the class is doing,” Mr. Chapman said of the method.

Teachers, then, can use the information “to inform instruction at the classroom level,” said Ginger Hopkins, the vice president of partner relations for the NWEA. “It allows teachers to adjust whole-group instruction and create flexible grouping” for students at similar achievement levels, she said.

Some schools, such as the 150-student K-8 Trinity Lutheran School in Portland, Ore., have used the test to regroup classrooms based on the most efficient use of resources. Facing declining enrollment and budget constraints, the school has used MAP to reconfigure students into multiage classes, said Principal Jim Riedl.

The Chicago International Charter Schools, a group of 12 charter schools teaching 7,500 students, is using the assessment in part to evaluate the efficacy of each of the four educational management organizations, or EMOs, the network employs.

“It’s very high-stakes for the EMO providers, and I’m not saying for our schools that are underperforming, it’s not scary,” said Beth D. Purvis, the executive director of the group of schools. “But frankly, if you’re an underperforming [school], you should be scared. It is a fair way of saying, ‘Get your act together.’ ”

Engaging Learners

Although many teachers were skeptical about the assessments when they were introduced in Poway Unified in 2001, “as students’ performance on the state test provided us with evidence that it was working, the schools came on board one by one,” said Ray Wilson, the recently retired executive director of assessment and accountability for the 33,000-student district, who oversaw the school system’s adoption of MAP.

The assessments have especially helped students on the upper and lower ends of the performance spectrum, said Linda Foote, the instructional technology specialist for the district.

In fixed-form tests, “students who are high-performing could look good all year without much effort,” she said, “and the struggling students could work and grow dramatically” but still appear to be underperforming.

With MAP, “we don’t honor students who aren’t working and dishonor students who are,” Ms. Foote said.

The assessments also play a key role in increasing student motivation, she said.

“One of the most stunning pieces is that the kids, because they finally have the connection between the work that they do and the progress they make,” Ms. Foote said, are “much more willing ... to do the work.”

The approach makes students active participants in their learning, she said, and “takes the mystery out of education.”

NCLB Constraints

Still, only one state—Oregon—uses a computer-adaptive assessment for reporting purposes under the federal No Child Left Behind Act.

Because NCLB requires that students be tested on grade level, most computer-adaptive tests, which may present students with questions above or below grade level depending on how they answer previous questions, are not allowed for accountability purposes.

However, Oregon’s test—the Oregon Assessment of Knowledge and Skills, or OAKS—is an adaptive test that stays on grade level, said Anthony Alpert, the director of assessment and accountability for the state department of education.

The test was piloted in 2001, the year the NCLB legislation passed Congress, and has since grown in popularity among school districts in the state, said Mr. Alpert. Today, almost all of Oregon’s state testing is through the computer-adaptive online assessment, he said.

“The real brilliance of the founders of the online system in Oregon was making it an optional approach and letting districts be innovative in making decisions in how they’re able to participate,” he said. As an incentive to encourage districts to use OAKS, each student is allowed to take the assessment three times a year, and the state records only the student’s highest score.

Although no other state uses computer-adaptive assessments for reporting purposes under NCLB, Utah Governor Jon M. Huntsman Jr., a Republican, last month signed into law a bill that allows a pilot test of such assessments in his state.

This school year, three districts are participating in the pilot, said Patti Harrington, the Utah superintendent of public instruction. The decision to use computer-adaptive testing came from recommendations from a panel on assessment that included superintendents, legislators, parents, and state- and district-level administrators, as well as Ms. Harrington.

“We like it because it does so much more than just say whether or not a student reached a certain point,” she said. “It emphasizes growth and can be used throughout the year to inform instruction. And because it’s computer-based, teachers have the results immediately.”

Addressing the Drawbacks

But computer-adaptive assessments are not the best way to evaluate students in every situation, experts point out.

“We think that [a computer-adaptive assessment] offers certain benefits, and it has certain drawbacks,” said Scott Marion, the vice president of the National Center for the Improvement of Educational Assessment, based in Dover, N.H. “Like all testing, it really depends on what you’re trying to do, and what you’re trying to learn.”

Computer-adaptive tests don’t provide as detailed diagnostic information as standards-based assessments, said Mr. Marion.

“A score is not diagnostic. ‘You’re pretty good in this area’ is not really diagnostic,” he said. “And in the standards-based world, that’s where we really run into trouble.”

Neal M. Kingston, an associate professor at the University of Kansas, in Lawrence, and a co-director of the Center for Educational Testing and Evaluation, shares Mr. Marion’s concerns about computer-adaptive assessments.

“Item-response theory, or the mechanism used to determine which items are easier or harder, ... assumes there’s a universal definition of hard and easy,” he said. In some subjects—reading, for instance—that assumption may hold, he said, but for other subjects—such as high school math, which may combine algebra and geometry questions—that assumption isn’t always correct.
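To make the assumption Mr. Kingston describes concrete, here is a minimal sketch of the simplest item-response model, the one-parameter (Rasch) form, in which each question has a single difficulty value. The article does not say which model any particular test uses, so the choice of model, the function name `p_correct`, and the difficulty values below are assumptions made for illustration only.

```python
import math


def p_correct(ability: float, difficulty: float) -> float:
    """Probability of a correct answer under a one-parameter (Rasch) model.

    Both values sit on the same scale; the probability is 0.5 when the
    student's ability equals the item's difficulty.
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# Made-up difficulty values for two hypothetical items.
item_difficulty = {"easy_item": -1.0, "hard_item": 1.0}

# Compare the two items for a low-, middle-, and high-ability student.
for ability in (-2.0, 0.0, 2.0):
    probs = {name: round(p_correct(ability, b), 2)
             for name, b in item_difficulty.items()}
    print(ability, probs)
```

Because each item carries one difficulty number, this model ranks the easier item as more likely to be answered correctly at every ability level. That single ordering is the "universal definition of hard and easy"; if students have learned algebra and geometry in different sequences, one ordering may not fit them all.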

“The adaptive-testing model assumes that everyone has taken [courses] or learned [subjects] in the same way,” which is not always the case, Mr. Kingston said.

Subjects such as social studies, in which the curriculum varies greatly from place to place, present a particularly difficult challenge for computer-adaptive tests, which are often created on a national or state level, he said.

“I think that the companies that provide these kinds of tests have an obligation,” Mr. Kingston said, “to provide more technical information than I have seen them do in the past about the appropriateness of the models that they’re using to determine whether it’s the same test in rural Kansas as it is in the center of New York City.”

Both Mr. Marion and Mr. Kingston say they recognize the potential of computer-adaptive testing, even as they voice caution.

“It’s a pretty nice framework for making certain types of tests for certain purposes,” said Mr. Marion, “but the promises—from what I’ve seen—far exceed the practice.”