In “Straight Talk with Rick and Jal,” Harvard University’s Jal Mehta and I examine the reforms and enthusiasms that permeate education. In a field full of buzzwords, our goal is simple: Tell the truth, in plain English, about what’s being proposed and what it means for students, teachers, and parents. We may be wrong and we will frequently disagree, but we’ll try to be candid and ensure that you don’t need a Ph.D. in eduspeak to understand us. Today’s topic is artificial intelligence.

—Rick

Rick: Let’s talk AI. It’s been a year and a half since ChatGPT was unveiled, which means we’re past the initial fixation on how artificial intelligence will end the world. In education, we’ve now moved on to more traditional pursuits: an admixture of enthusiasm for AI’s “transformative promise” and concerns about cheating, access, and privacy.

I feel contractually obliged to start with my ed-tech mantra: It’s not the technology that matters; it’s what we do with it. And we have a pretty lousy track record on that count. Larry Cuban has aptly noted that, from the radio to the computer, ed tech has long been “oversold and underused.”

But that’s not an iron law. Technology can be transformative. As Bror Saxberg and I observed many years ago, some once-novel technologies—like the book and the chalkboard—did have transformative effects on learning by making it cheaper and more customizable, accessible, and reliable. The book democratized knowledge and expertise. The chalkboard gave teachers new ways to illustrate ideas and explain math problems.

There is obvious potential here, from AI-fueled technologies like tutoring systems to AI-enabled high-quality support for lesson planning and assessment. When it comes to tutoring, for instance, one of the age-old challenges is a dearth of trained, reliable tutors. Well, it doesn’t take a lot of imagination to see how (in theory) 24/7, reliable, personalized AI-powered tutoring could have a profound impact.

The actual benefits, of course, will depend on how AI is used and whether most students can actually learn effectively from a nonhuman mentor, and that’s all very much to be determined. But I want to focus on a seldom-explored dimension here: the ways in which AI seems to be a different kind of tool that’s disorienting and that could erode the relationship between students and knowledge.

Now, this isn’t about whether AI will be good for the economy. After all, some tools are good for productivity but lousy for learning. The development of GPS, for instance, made it far quicker, easier, and more convenient to find our way around. (Anyone who remembers wrestling with crumpled maps in the passenger seat knows what I mean.) There was a cost, of course: GPS ravaged our sense of physical geography. Because geography doesn’t loom especially large in schooling today, the impact of GPS on education has been pretty modest.

When it comes to AI, though, I fear things are much more disconcerting than they are with a GPS. We already have a generation of students who’ve learned that knowledge is gleaned from web searches, social media, and video explainers. Accomplished college graduates have absorbed the lesson that if something doesn’t turn up in a web search, it’s unfindable. And we’ve learned that few people tend to fact-check what they find on the web; if Wikipedia reports that a book said this or a famous person said that, we tend to take it on faith.

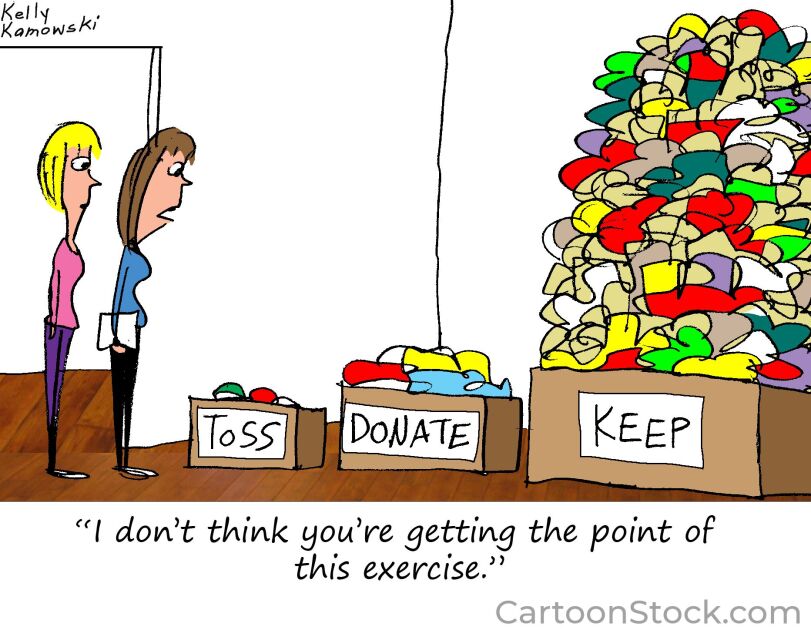

Jal: Yep. Especially with young people who are learning to do something for the first time, there is a real risk that AI will replace the hard work of learning how to write, design, or program. Tools like AI are at their best when they complement human intelligence and skill, but those human capacities develop slowly and often painfully, which is why shortcuts are so tempting but ultimately misguided.

Learning environments often take away some of these tools to force students to concentrate on learning the skills they are being taught. For instance, laptops are banned from many Harvard Business School classes because computers provide too many interruptions and professors want students to engage in case discussions without distractions. When I was learning to ski as an adult, my instructor forbade me to use poles until I could get down the mountain without them. When we teach pitching in baseball, we often start the kids on one knee so that they can get the top half of the body right before they worry about the legs. So, there is a long tradition of restricting the learning environment so as to focus on the actual skills that we want to teach.

Teachers can do that in school, but the use of ChatGPT and Microsoft Copilot for homework is, based on what I can see, absolutely rampant. And here I think AI is revealing an old problem rather than creating a new one: There is so much work that just has to be completed, but students see little purpose or meaning in what they are doing. TikTok is awash with videos about HOMEWORK: Half Of My Energy Wasted On Random Knowledge. In many classes, kids do so many worksheets that teachers don’t have time to mark them. If this is what learning is like, particularly if the assignments are mainly asking for summaries of factual knowledge, AI is going to be the solution. But if kids had fewer assignments that connected to a purpose they saw as relevant, and if more of that work were original rather than the same recycled assignments year after year, then perhaps we would see more original thinking and less AI-generated content.

Rick: Agree. Agree. Agree. It strikes me that AI is simultaneously creating powerful ways to bolster instruction, threatening to erode learning, and automating pointless routines. One of the problems is that all of this typically gets scrambled together in the public mind, along with confusion about how AI works—making it tough to think rigorously about risks and benefits.

Given that, I want to flag three points. First, as we’ve noted, AI could prove to be a useful tutor. Experience suggests, though, that few students actually access tutors unless the time is deliberately scheduled and monitored. And there are real practical questions about how well tutoring will work for learners if it’s devoid of human interaction. So, we should be duly measured about the promise of “a tutor in every pocket” until we see more.

Second, the folks working on AI in schools consistently tell me that one of the biggest near-term opportunities is for AI to be a support system and a time-saver for educators. It can help streamline the IEP process so that special educators spend more time educating. It can help with lesson planning, slide decks, and reporting, allowing teachers to focus more on students and less on paperwork. The problem is that AI is only as good as the material it’s been fed. After all, AI doesn’t actually “know” things; rather, it predicts the next word in a sentence or the next pixel in a picture based on the data it’s been trained on. That’s why it can be hugely unreliable—explaining the annotated bibliographies filled with nonexistent articles and the legal briefs citing nonexistent case law. If teachers are relying on ChatGPT to construct lesson plans or instructional materials, they are unintentionally wading into waters rife with huge concerns about accuracy, reliability, and bias.

Third, given these issues with trustworthiness, we should be far more nervous about AI becoming a default resource for learners. For this reason, the most famous works of dystopian fiction may not have been bleak enough about the risks posed to human knowledge and free thought. After all, in 1984 and Fahrenheit 451, the book burners have a tough job. They have to bang on doors and comb through archives in their attempt to stomp out “thoughtcrime.” The emergence of a few giant, poorly trained AI systems could make thought policing easy and breezy: Tweak an algorithm or produce a deepfake and you can rewrite vast swaths of reality or hide inconvenient truths. And it’s all voluntary. No one needs to strip books from shelves. Rather, barely understood AI is obliquely steering students toward right-think.

The legacy of social media is instructive. Twenty years ago, there was a lot of enthusiasm for all the ways in which the democratization of the internet was going to promote healthy discourse, mutual understanding, and knowledge. That mindset was particularly evident in education. Things didn’t turn out quite the way we hoped. I’m hoping we’ll do better this time.

Jal: Great points. A couple of things to pick up on. Will tutors work in the absence of human interaction? It depends on who the learners are and, more importantly, whether they want to learn the subject. David Cohen and I wrote a paper a few years ago that argued that reforms succeeded when they met a need that teachers thought they had. The same is true for students. Khan Academy has been a godsend to high school and college students whose teachers and professors are bad at explaining things. However, if a student is struggling in a compulsory subject and doesn’t really want to learn it, then offering them an AI tutor is not likely to fix things.

Your point about social media is compelling. We’ve gone from the nascent internet promising to democratize knowledge to a much more developed social media ecosystem causing a national panic about what it does to teenagers. Unlike, say, the CD-ROM, AI is not a “thing” that schools are trying to get students to use. It is an all-pervasive technology that will change many aspects of life—and schools, like other institutions, have no choice but to figure out how to respond. Because the technology is rapidly evolving, it’s often not going to be clear what the right answer is. Is it OK for AI to write a Valentine’s Day message? A sermon? It’s not just schools that have a lot to figure out.

Given that, I have one suggestion: Let students in on the conversation. Let them think about when it is OK to use AI (for instance, as a starting point to generate some ideas for writing) and when doing so would impede skill development or create unfairness. Have students draft guidelines about the uses of AI in school, and as they do so, let them read the work of the various task forces and commissions that are pondering the same questions. Not only would this turn AI into a teaching moment, but it would also give students a sense of ownership over AI guidelines. Students are much more likely to follow guidelines that they help develop than ones imposed upon them by adults.

AI is here to stay. We need to figure out how to channel it in ways that serve human ambitions, and students should play a part in that process.